Early last year we completed a massive migration that moved our customers’ hosting data off a legacy datacenter (FR-SD2) onto several new datacenters (FR-SD3, FR-SD5, and FR-SD6) with much more modern infrastructure.

This migration required several changes in both the software and hardware we use, including switching the operating system on our storage units to FreeBSD.

Simple Hosting, our web hosting service, relies on the NFS protocol to export its filesystems from these storage units, and the FreeBSD kernel includes an NFS server for exactly this purpose.

Problem

While migrating virtual disks of Simple Hosting instances from FR-SD2, we noticed high CPU load spikes on the new storage units.

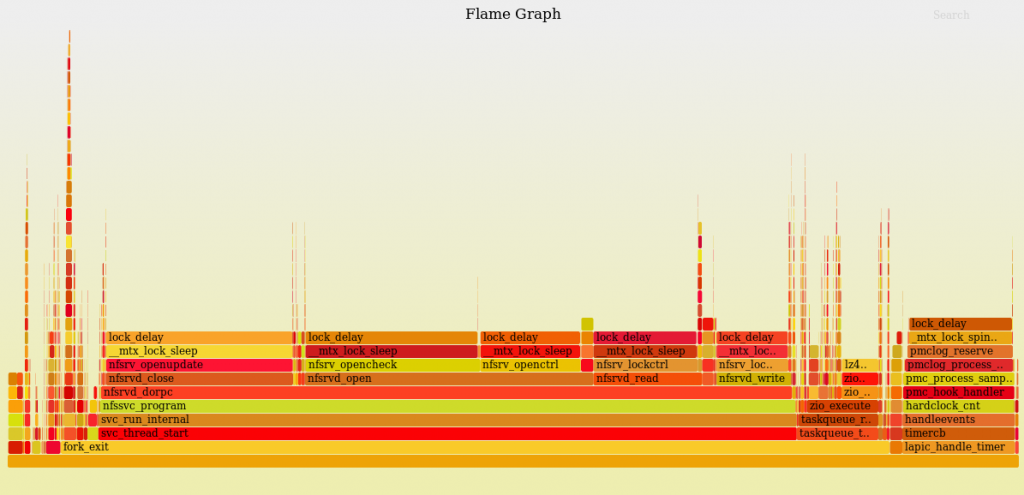

To understand what was going on, we ran pmcstat(8) to generate flamegraphs, which showed that the load was due to lock contention in the kernel part of nfsd(8).

Using dtrace(1), we analyzed the requests that were triggering this lock contention and added the NFS metrics we were missing. For instance, we could now list the top talkers for each type of operation.

We then realized, too late, that the kernel NFS server did not scale well enough to handle our workload.

Solutions

Since the load spikes were happening with increasing frequency, we had to find a solution pretty quickly.

Five years after launching our web hosting product, we questioned whether NFS was really the right choice for Simple Hosting instances.

Would it be possible to export data through block devices, as on our IaaS product (Gandi cloud)? It seemed so, but it would mean removing some customer-facing features, such as access to snapshots through SFTP. To keep all features, we had to stick with plain filesystem exports.

Given that the code is hard to understand and that any bug would impact the entire system, solving the load issue by fixing the kernel code seemed too complicated and risky, especially with customer data on the line.

Nonetheless, we decided to give it a try. As a first step, we made sure the kernel part of the NFS server was built as a dynamically loadable kernel module (see kld(4)), so that we could reload it safely without rebooting the whole system.

We upstreamed some patches to fix reloading issues with the dynamic NFS module.

At that point we found many potential sources of lock contention in the code that could explain our load issues. Most of them were related to delegations, and since fixing them would have required rewriting most of the code, we tried disabling delegations completely.

A significant delegation-related lock contention remained even after disabling delegations: a lock was taken when processing a GETATTR operation even though no write delegation had been granted, which seemed completely unnecessary.

GETATTR retrieves file attributes over NFS (e.g., when running stat(1) on a file), and according to our metrics it is the most frequent operation. We proposed a patch to reduce this contention, and it was accepted upstream.
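To make the link between stat(1) and GETATTR concrete, here is a minimal C sketch of the call an application makes (print_attrs() is a hypothetical helper). On an NFS mount, the client answers stat(2) from its attribute cache when it can and otherwise sends a GETATTR request to the server, so a workload that stats files frequently produces a steady stream of these requests.

```c
#include <stdio.h>
#include <sys/stat.h>

/*
 * Print a few of the attributes a GETATTR reply carries (size, mode, mtime).
 * On an NFS mount, the client serves stat(2) from its attribute cache when
 * it can; otherwise it sends a GETATTR request to the server.
 * Returns 0 on success, -1 on error.
 */
int print_attrs(const char *path)
{
    struct stat st;

    if (stat(path, &st) == -1) {
        perror(path);
        return -1;
    }
    printf("%s: size=%lld mode=%o mtime=%lld\n", path,
           (long long)st.st_size, (unsigned int)(st.st_mode & 07777),
           (long long)st.st_mtime);
    return 0;
}
```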

Even after all that, the load issue persisted. The biggest lock contentions, all related to access conflict checks, were still there, so we reworked our internal architecture to have only a single NFS client per export, in an attempt to remove all the access conflict code. Since this was a risky solution, we started looking for alternatives in parallel.

We considered replacing NFS with Samba (for the SMB protocol), but since its scalability potential looked low, we didn’t push that option further.

NFSv4, which supports delegations, had been in production for a while without major issues, so staying on it looked like the safest option.

We found the NFS-Ganesha project while searching for alternative solutions. It’s a userspace server implementation of the NFS protocol and supports all the features we need: NFSv4/v4.1 and delegations.

We figured that running the server in userspace would be a huge boon, since we could roll out updates just by restarting the service instead of rebooting the whole system.

Unfortunately, the project no longer supported FreeBSD. Although a compatibility code base was still present, it hadn’t been updated for many years and was partially obsolete.

We had to update and complete the FreeBSD port in order to use it fully.

NFS-Ganesha

On our first try, the code failed to compile. We had to fix some function calls to make them compatible with FreeBSD. For instance, getaddrinfo(3) is not implemented the same way on Linux and FreeBSD; according to the FreeBSD manual, one key difference is that “all other elements of the addrinfo structure passed via hints must be zero or the null pointer.”

We also had to use gai_strerror(3) instead of strerror(3) to print errors from getaddrinfo(3), which reports failures as EAI_* codes rather than errno values.
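A minimal sketch of the portable pattern (the resolve() helper is hypothetical, not taken from NFS-Ganesha): zero the whole hints structure before setting the fields you need, and report failures with gai_strerror(3). The numeric flags in the usage below only keep the example independent of DNS.

```c
#include <netdb.h>
#include <stdio.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>

/*
 * Resolve host/service into a list of stream-socket addresses.
 * Returns 0 on success or the EAI_* code from getaddrinfo(); the caller
 * frees *res with freeaddrinfo().
 */
int resolve(const char *host, const char *service, int flags,
            struct addrinfo **res)
{
    struct addrinfo hints;
    int rc;

    /* FreeBSD requires the fields we do not set (ai_addrlen, ai_addr,
     * ai_canonname, ai_next) to be zero or NULL, so clear everything first. */
    memset(&hints, 0, sizeof(hints));
    hints.ai_family = AF_UNSPEC;      /* IPv4 or IPv6 */
    hints.ai_socktype = SOCK_STREAM;
    hints.ai_flags = flags;

    rc = getaddrinfo(host, service, &hints, res);
    if (rc != 0) {
        /* rc is an EAI_* code, not an errno value: strerror(rc) would
         * print an unrelated message. */
        fprintf(stderr, "getaddrinfo: %s\n", gai_strerror(rc));
    }
    return rc;
}
```

With AI_NUMERICHOST set, a non-numeric host fails with EAI_NONAME instead of triggering a DNS lookup.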

After these adjustments, the code compiled successfully. However, we noticed that Ganesha needed special syscalls that vanilla FreeBSD does not implement. Before describing them, it helps to know the main requirements of any userspace NFS server.

A multithreaded userspace file server must access filesystems with the various credentials (users/groups) that clients present through the NFS protocol, so each worker thread needs to act with the credentials of the request it is handling.

However, according to POSIX, all threads within a process must share the same credentials. On Linux, credentials are implemented per thread and the syscalls only change the calling thread’s credentials, but the GNU C library preserves POSIX semantics by wrapping these syscalls so that any change applies to all threads of the process.

On FreeBSD, credentials are also implemented per thread, but the syscalls themselves change the credentials of all threads in the process, so there is no way to change them for a single thread.
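To illustrate the Linux side of this difference, here is a minimal, Linux-only sketch (the cred_probe structure and helper names are hypothetical): calling setresuid through syscall(2) bypasses the glibc wrapper, so only the calling thread's effective UID changes. It assumes 64-bit Linux, where SYS_setresuid takes 32-bit UIDs, and it needs root (or CAP_SETUID) to switch to another user. FreeBSD offers no equivalent, which is the gap the special syscalls mentioned above are meant to fill.

```c
#define _GNU_SOURCE
#include <pthread.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical probe: the euid the worker asks for and what it then sees. */
struct cred_probe {
    uid_t target_euid;
    uid_t seen_euid;
    long rc;            /* result of the raw syscall: 0 or -1 */
};

static void *worker(void *arg)
{
    struct cred_probe *p = arg;

    /*
     * Raw syscall, bypassing glibc's setresuid() wrapper (which would apply
     * the change to every thread). -1 keeps the real and saved UIDs as they
     * are, so this thread could switch back later.
     */
    p->rc = syscall(SYS_setresuid, -1L, (long)p->target_euid, -1L);
    p->seen_euid = geteuid();   /* effective UID of this thread only */
    return NULL;
}

/* Run worker() in a new thread; returns 0 if its credential switch succeeded. */
int switch_in_thread(struct cred_probe *p)
{
    pthread_t t;

    if (pthread_create(&t, NULL, worker, p) != 0)
        return -1;
    if (pthread_join(t, NULL) != 0)
        return -1;
    return p->rc == 0 ? 0 : -1;
}
```

Run as root, the worker ends up with effective UID 65534 while the main thread keeps UID 0.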

The NFS protocol uses “file handles” to uniquely identify files on a system, so the server needs syscalls that access files through these handles.

For example, the Linux kernel implements the open_by_handle_at(2) syscall, which opens a file from a given file handle, relative to a file descriptor that identifies the filesystem. We could not find a similar syscall on FreeBSD, so we had to implement our own.

To do that, we based our code on an existing syscall, getfh(2), which already does part of the work; the only thing it lacks is the file-descriptor-relative part.
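For reference, here is a minimal sketch of the Linux round trip a userspace NFS server relies on (reopen_by_handle() is a hypothetical helper, not Ganesha code): name_to_handle_at(2) turns a path into an opaque handle, and open_by_handle_at(2) reopens the object from the handle alone, using the export root's descriptor to identify the filesystem. Note that open_by_handle_at(2) requires CAP_DAC_READ_SEARCH, and not every filesystem supports file handles.

```c
#define _GNU_SOURCE /* name_to_handle_at(2), open_by_handle_at(2): Linux-specific */
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <unistd.h>

/*
 * Turn `path` (relative to the export root `export_fd`) into a file handle,
 * then reopen the same object from that handle alone, as a server does when
 * a client later presents the handle.
 * Returns an open fd, or -1 with errno set (EPERM without CAP_DAC_READ_SEARCH).
 */
int reopen_by_handle(int export_fd, const char *path)
{
    struct file_handle *fh;
    int mount_id, fd, saved_errno;

    fh = malloc(sizeof(*fh) + MAX_HANDLE_SZ);
    if (fh == NULL)
        return -1;
    fh->handle_bytes = MAX_HANDLE_SZ;

    /* Step 1: path -> handle (the role FreeBSD's getfh(2) plays). */
    if (name_to_handle_at(export_fd, path, fh, &mount_id, 0) == -1) {
        saved_errno = errno;
        free(fh);
        errno = saved_errno;
        return -1;
    }

    /* Step 2: handle -> fd; export_fd only tells the kernel which
     * filesystem the handle must be decoded in. */
    fd = open_by_handle_at(export_fd, fh, O_RDONLY);
    saved_errno = errno;
    free(fh);
    errno = saved_errno;
    return fd;
}
```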

As mentioned earlier, there was already a FreeBSD code base in NFS-Ganesha. In fact, a first port had been done a few years earlier, but on a modified version of FreeBSD whose changes were never made public or upstreamed into the official sources.

To implement the missing syscalls, we used a handy FreeBSD feature that lets us add syscalls through loadable kernel modules (KLD).

We released these modules on GitHub and are working on getting these syscalls into the official kernel sources.

To verify the changes we made for FreeBSD compatibility, we ran many tests at the NFS protocol level using available tools (NFStest, pynfs). We checked that pynfs results were similar on Linux and FreeBSD and that NFStest’s POSIX tests showed no regression.

Once these were validated, we checked that the features we needed were available and made the required changes to them (direct access to snapshots, dynamic addition and removal of exports, etc.).

After that, we ran performance tests on NFS-Ganesha (parallel I/O from many clients, access to large directories and files) and compared the results with those of the in-kernel server.

During testing, we noticed that NFS-Ganesha consumed CPU even when no operation was in progress. Upon investigation, we found some timer-related bugs (time unit conversion issues) in the FreeBSD compatibility code of NTIRPC.

NTIRPC is the library that provides the RPC implementation underlying the NFS protocol in NFS-Ganesha.

We also found many memory leaks caused by differences between the FreeBSD and Linux pthread implementations. On FreeBSD, pthread objects (mutexes, condition variables, attributes, etc.) are allocated dynamically by the library, whereas on Linux they live in memory provided by the caller.

If these objects are not properly destroyed on FreeBSD, they leak memory. All these fixes have been pushed to the official repositories.

Once all of this was stable, we created two FreeBSD ports to build packages for NFS-Ganesha and for the required syscall modules. Both ports are now officially available.

Migration

Once packages had been built, we started deploying this solution in production by spawning a new storage unit.

We were able to validate it within a few months, fixing issues as they arose (new memory leaks, a very large cache).

To migrate existing storage units to NFS-Ganesha, we had to unmount all exports on clients, because the file handle structure differs between NFS-Ganesha and the FreeBSD kernel server; otherwise, file accesses would have returned errors.

We had to shut down all instances related to the impacted storage unit, stop the nfsd(8) service, generate the NFS-Ganesha configuration file through our API, start NFS-Ganesha, and then relaunch all instances.

Because the procedure was so intensive, it took us weeks to progressively migrate all of our storage units.

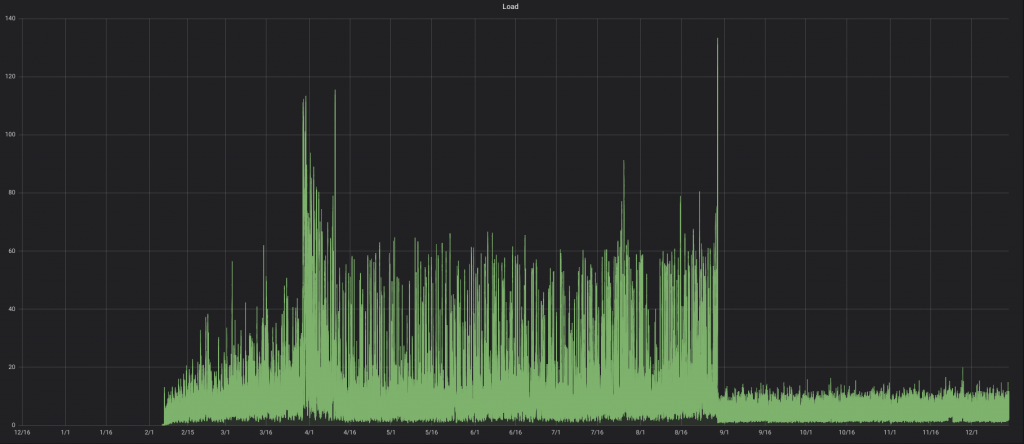

And then… the impact on the load average of storage units is crystal clear:

Note: we leave it to the reader to figure out when the migration to Ganesha happened.

Conclusion

We finally solved the load issue on our storage units. Surprisingly, NFS-Ganesha also performs much better than the kernel server, and it brings us even more benefits.

Although Linux and FreeBSD may look the same since they share UNIX roots, we found many differences once we looked deeper.

People at Red Hat are working hard to better integrate Ceph and Kubernetes with NFS-Ganesha, which looks promising for the future.

Fatih is a system engineer at Gandi, based in our Paris office. If you have any questions, feel free to reach out on Twitter @gandinoc.

We are hiring!

Do you want to help us keep improving the reliability and performance of our cloud infrastructure? Are you passionate about storage technologies and eager to get involved in the open source ecosystem? Join us! We are hiring system developers; you can find our available positions here (in French).
